Work at CCG

For the UH4SP project, CVIG contributes to the automatic identification of drivers and vehicles using computer vision and machine learning techniques. Vehicle identification has two parts: axle counting and vehicle recognition. Automatic driver identification is done through face recognition in a mobile application.

Axle detection and counting

The axle count is important information for verifying the type of vehicle entering the industrial premises. Apart from common vehicles such as cars, vans and some kinds of trucks, most vehicles entering the premises differ in their number of axles, so the axle count helps identify the correct vehicle type.

Axles appear in the image as circular patterns. In image processing, the Hough transform is a pattern recognition technique used to identify shapes that can be parameterized, such as straight lines, circles, and ellipses. The Hough transform and its variants have been used in numerous pattern recognition applications, for example to detect circular and sub-circular features representing archaeological monuments in aerial photographs. We use the generalized Hough transform to detect circular patterns representing wheels, because the transform is robust to missing data and noise in the image. The axle detection and counting process is shown below.
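To make the voting idea behind the circle Hough transform concrete, here is a minimal NumPy sketch for a single, known radius. It is not the project's implementation. It assumes that edge pixels have already been extracted (for example with an edge detector) and that the wheel radius in pixels is known. The function name `hough_circle_accumulator` is hypothetical. Each edge pixel votes for every point that lies `radius` away from it, and true centres collect one vote from each edge pixel on their circle.

```python
import numpy as np


def hough_circle_accumulator(edge_points, radius, shape, n_angles=360):
    """Vote for the centres of circles with a fixed, known radius.

    edge_points: iterable of (row, col) edge-pixel coordinates.
    radius: assumed wheel radius in pixels (hypothetical, known in advance).
    shape: (height, width) of the image; the accumulator has the same size.
    Peaks in the returned accumulator are likely circle centres.
    """
    acc = np.zeros(shape, dtype=np.int32)
    thetas = np.linspace(0.0, 2.0 * np.pi, n_angles, endpoint=False)
    # Candidate offsets from an edge pixel to a centre `radius` away.
    # np.unique ensures each edge pixel votes at most once per candidate.
    offsets = np.unique(
        np.stack([np.round(radius * np.sin(thetas)),
                  np.round(radius * np.cos(thetas))], axis=1).astype(int),
        axis=0,
    )
    for r, c in edge_points:
        rows = r - offsets[:, 0]
        cols = c - offsets[:, 1]
        # Discard candidate centres that fall outside the image
        inside = (rows >= 0) & (rows < shape[0]) & (cols >= 0) & (cols < shape[1])
        np.add.at(acc, (rows[inside], cols[inside]), 1)
    return acc
```

A practical detector searches a range of radii, which requires a 3D accumulator over (row, col, radius). OpenCV's `cv2.HoughCircles` avoids that brute-force search by using edge gradient directions (the Hough gradient method).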

- The green box in the video is the ROI (Region Of Interest). To keep the application computationally efficient, I chose to apply axle detection only inside the ROI.

- The application detects axles with the Hough circle transform.

- The system also performs well for real-time detection and counting.

PS: Due to work restrictions, only preliminary results from two different videos are shown below.

The details of face recognition and vehicle classification will be added soon.

```python
import cv2
import numpy as np
```
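The bullet list earlier mentions real-time counting but does not say how a wheel that stays visible for many frames is counted only once. One common approach, assumed here and not confirmed by the source, is to count a wheel when its centre crosses a virtual vertical line inside the ROI. The sketch below matches detections in consecutive frames by nearest neighbour. The class name `LineCrossingCounter` and the `max_jump` threshold are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


class LineCrossingCounter:
    """Count objects whose centre crosses the vertical line x = line_x.

    Detections in consecutive frames are associated greedily by nearest
    neighbour within max_jump pixels (a simplifying assumption; crowded
    scenes would need a proper tracker).
    """

    def __init__(self, line_x: float, max_jump: float = 40.0):
        self.line_x = line_x
        self.max_jump = max_jump
        self.prev: List[Point] = []
        self.count = 0

    def update(self, centres: Sequence[Point]) -> int:
        unmatched = list(self.prev)
        for cx, cy in centres:
            # Find the closest unmatched centre from the previous frame
            best_i, best_d2 = None, self.max_jump ** 2
            for i, (px, py) in enumerate(unmatched):
                d2 = (cx - px) ** 2 + (cy - py) ** 2
                if d2 <= best_d2:
                    best_i, best_d2 = i, d2
            if best_i is None:
                continue  # a new wheel entering the ROI
            px, _ = unmatched.pop(best_i)
            # Count once when the centre moves across the line (either direction)
            if px < self.line_x <= cx or px > self.line_x >= cx:
                self.count += 1
        self.prev = [tuple(c) for c in centres]
        return self.count
```

Each frame, the `(x, y)` centres of the detected circles would be passed to `update`, and `count` then holds the number of axles seen so far.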

Video 1

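```python
import io
import base64

from IPython.display import HTML

# Read the MP4 file as raw bytes; read-only binary mode is enough
with io.open('outpy.mp4', 'rb') as f:
    video = f.read()

# Base64-encode the bytes so the video can be embedded inline as a data URI
encoded = base64.b64encode(video)

HTML(data='''<video width="640" height="420" controls><source src="data:video/mp4;base64,{0}" type="video/mp4" /></video>'''.format(encoded.decode('ascii')))
```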

Video 2

```python
import io
import base64

from IPython.display import HTML

# Read the MP4 file as raw bytes; read-only binary mode is enough
with io.open('car2.mp4', 'rb') as f:
    video = f.read()

encoded = base64.b64encode(video)

HTML(data='''<video width="640" height="420" controls><source src="data:video/mp4;base64,{0}" type="video/mp4" /></video>'''.format(encoded.decode('ascii')))
```

Research Intern: Fish Detection, Tracking and Recognition

This is my internship work at the LIED lab, University Paris 7, funded by the European project http://assisi-project.eu/.

Description:

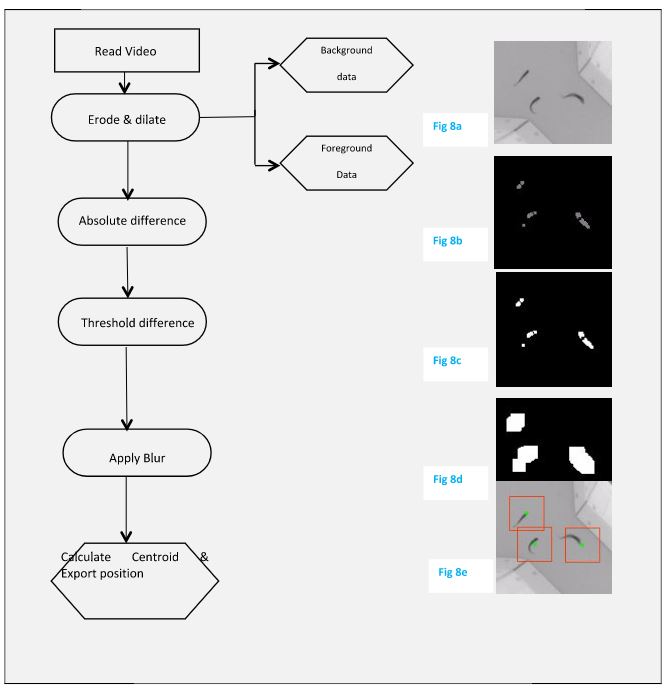

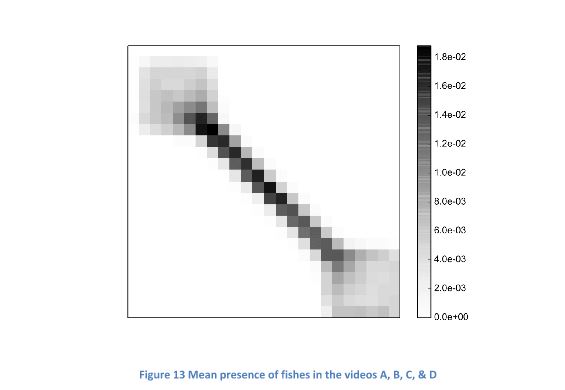

Biologists study the behavior of different fish species, both in groups and as individuals. With this in mind, we developed a method for studying each fish individually and within a group. The aim is to detect, track and identify the movement of each fish in a video using computer vision and machine learning techniques. The experimental setup can be seen in the video below. Each video is 20 minutes long at 60 FPS.

For detection and tracking, we followed the process shown in the figures above. The bounding box of each detected fish is saved for every frame, and the first 8 minutes were annotated manually. The spatial coordinates of each detected fish are used to study the mean presence in the experimental setup and the inter-individual distribution of fish in groups.

For the machine learning part, we used bag-of-words features and histogram of oriented gradients (HOG) features, and trained a single-layer neural network on them. The 8 annotated minutes give 8 min × 60 s/min × 60 frames/s × 5 fish = 144,000 samples (in practice slightly fewer, close to 140,000). We used 80% of the data to train and tune the model and 20% to validate it.

The major problem we faced was occlusion. When fish occlude each other, the positions of two or more fish merge into one, and the bounding box crops a mix of all the occluded fish. This accounts for a noticeable share of the errors.
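The classification step described above (HOG features, a single-layer network, and an 80/20 split) can be sketched in plain NumPy. Several simplifications are assumptions and not the method actually used. The HOG descriptor keeps per-cell orientation histograms but replaces block normalisation with one global L2 normalisation. The bag-of-words features are omitted. "Single-layer neural network" is read as softmax regression, meaning no hidden layer. All function names are hypothetical.

```python
import numpy as np


def hog_features(patch, cell=8, bins=9):
    """Simplified HOG: per-cell histograms of unsigned gradient orientation.

    Block normalisation from the original HOG descriptor is replaced by a
    single global L2 normalisation to keep the sketch short.
    """
    patch = patch.astype(np.float64)
    gy, gx = np.gradient(patch)
    mag = np.hypot(gx, gy)
    ang = np.rad2deg(np.arctan2(gy, gx)) % 180.0  # unsigned orientation in degrees
    h, w = patch.shape
    feats = []
    for r in range(0, h - cell + 1, cell):
        for c in range(0, w - cell + 1, cell):
            a = ang[r:r + cell, c:c + cell].ravel()
            m = mag[r:r + cell, c:c + cell].ravel()
            # Magnitude-weighted orientation histogram for this cell
            hist, _ = np.histogram(a, bins=bins, range=(0.0, 180.0), weights=m)
            feats.append(hist)
    v = np.concatenate(feats)
    return v / (np.linalg.norm(v) + 1e-6)


def split_80_20(X, y, seed=0):
    """Shuffle, then keep 80% of the samples for training and 20% for validation."""
    idx = np.random.default_rng(seed).permutation(len(X))
    cut = int(0.8 * len(X))
    return X[idx[:cut]], y[idx[:cut]], X[idx[cut:]], y[idx[cut:]]


def train_softmax(X, y, n_classes, lr=0.5, epochs=300, seed=0):
    """Single-layer network (softmax regression) trained by batch gradient descent."""
    rng = np.random.default_rng(seed)
    W = rng.normal(scale=0.01, size=(X.shape[1], n_classes))
    b = np.zeros(n_classes)
    Y = np.eye(n_classes)[y]  # one-hot targets
    for _ in range(epochs):
        logits = X @ W + b
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        P = np.exp(logits)
        P /= P.sum(axis=1, keepdims=True)
        grad = (P - Y) / len(X)  # gradient of mean cross-entropy w.r.t. logits
        W -= lr * X.T @ grad
        b -= lr * grad.sum(axis=0)
    return W, b


def predict(X, W, b):
    return np.argmax(X @ W + b, axis=1)
```

In this setting, each sample would be a cropped fish bounding box, resized to a fixed patch size, and the label would be the fish identity (5 classes). With about 140,000 samples, the 80/20 split leaves roughly 112,000 for training and 28,000 for validation.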

```python
import io
import base64

from IPython.display import HTML

# Read the MP4 file as raw bytes; read-only binary mode is enough
with io.open('fish1.mp4', 'rb') as f:
    video = f.read()

encoded = base64.b64encode(video)

HTML(data='''<video width="640" height="420" controls><source src="data:video/mp4;base64,{0}" type="video/mp4" /></video>'''.format(encoded.decode('ascii')))
```
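The fish description says the spatial coordinates are used to study the mean presence in the setup and the inter-individual distribution, but it does not name the exact measures. The sketch below assumes two common ones. The first is a normalised 2D occupancy histogram over the arena. The second is the set of pairwise distances between fish in each frame. The arena size, the bin counts and the function names are hypothetical.

```python
import numpy as np


def presence_map(xy, arena_w, arena_h, bins=(20, 20)):
    """Fraction of detections falling in each spatial bin of the arena.

    xy: array of shape (n_detections, 2) with (x, y) positions.
    Returns an array of shape bins whose entries sum to 1.
    """
    H, _, _ = np.histogram2d(xy[:, 0], xy[:, 1], bins=bins,
                             range=[[0, arena_w], [0, arena_h]])
    return H / H.sum()


def inter_individual_distances(frame_xy):
    """Pairwise Euclidean distances between the fish in one frame.

    frame_xy: array of shape (n_fish, 2). Returns the upper-triangle
    distances, i.e. n_fish * (n_fish - 1) / 2 values.
    """
    diff = frame_xy[:, None, :] - frame_xy[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    iu = np.triu_indices(len(frame_xy), k=1)
    return dist[iu]


def mean_pairwise_distance_per_frame(tracks):
    """tracks: array of shape (n_frames, n_fish, 2). Mean pairwise distance per frame."""
    return np.array([inter_individual_distances(f).mean() for f in tracks])
```

With 5 fish, each frame yields 10 pairwise distances. Their distribution over time indicates how tightly the group stays together.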

Face Recognition and Clustering

```python
import io
import base64

from IPython.display import HTML

# Read the MP4 file as raw bytes; read-only binary mode is enough
with io.open('finaloviya.mp4', 'rb') as f:
    video = f.read()

encoded = base64.b64encode(video)

HTML(data='''<video width="640" height="420" controls><source src="data:video/mp4;base64,{0}" type="video/mp4" /></video>'''.format(encoded.decode('ascii')))
```

Please click here to see a good-quality version of the video (open it in VLC).